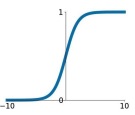

Sigmoid is one of the earliest activation functions used in neural networks. It was originally motivated as a model of the firing rate of a biological neuron.

The sigmoid, $\sigma(x) = \frac{1}{1 + e^{-x}}$, squashes any real input into the range $(0, 1)$.
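A minimal Python sketch of the formula, assuming plain floats and only the standard library. The branch on the sign of `x` is an implementation choice added here, not something from the text. It keeps `math.exp` from overflowing (it raises `OverflowError` for very large arguments) when `x` is a large negative number. Both branches are algebraically equal to $1/(1+e^{-x})$.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + e^{-x}), arranged so math.exp never overflows."""
    if x >= 0:
        # Here e^{-x} <= 1, so the exponential is always safe to evaluate.
        return 1.0 / (1.0 + math.exp(-x))
    # For x < 0, use the equivalent form e^{x} / (1 + e^{x}),
    # which only ever exponentiates a negative number.
    z = math.exp(x)
    return z / (1.0 + z)

for x in (-10.0, -1.0, 0.0, 1.0, 10.0):
    print(f"sigmoid({x:+.1f}) = {sigmoid(x):.6f}")
```

Every printed value lies strictly between 0 and 1, with `sigmoid(0.0)` equal to 0.5. In floating point, inputs of very large magnitude round to exactly 0.0 or 1.0, even though the mathematical range is the open interval.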

The sigmoid has three main problems:

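- **Vanishing gradients.** The sigmoid can "kill" the gradient when its input is far from 0 (large positive or large negative). The gradient of the sigmoid $\sigma(x)$ with respect to its input $x$ is

  $$\sigma'(x) = \frac{d\sigma(x)}{dx} = \sigma(x)\,\bigl(1 - \sigma(x)\bigr)$$

  which peaks at $0.25$ when $x = 0$ and approaches $0$ as $|x|$ grows, because $\sigma(x)$ saturates at $0$ or $1$. During backpropagation this small factor is multiplied into the gradients of all earlier layers, so the gradient can shrink toward zero. This is the vanishing-gradient problem.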

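- **Outputs are not zero-centered.** Because $\sigma(x) > 0$ for every $x$, a neuron whose inputs $\mathbf{a}$ come from a sigmoid layer (all positive) computes $z = \mathbf{w}^\top \mathbf{a} + b$, and the gradient with respect to its weights is $\frac{\partial L}{\partial \mathbf{w}} = \frac{\partial L}{\partial z}\,\mathbf{a}$. Every element of this vector has the same sign as the scalar $\frac{\partial L}{\partial z}$, so for a single sample the weights can only all increase or all decrease. In practice the weights then have to "zig-zag" toward the optimum, which slows convergence. This is not a severe problem in practice: the same-sign constraint holds only per sample, and once the gradients are summed over a mini-batch, different samples contribute different signs, so the final update can mix positive and negative deltas.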

- **Computational cost.** Evaluating the exponential $e^{-x}$ is relatively expensive compared with basic arithmetic operations such as additions, multiplications or comparisons.
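For reference, the derivative $\sigma'(x) = \sigma(x)\,(1 - \sigma(x))$ behind the vanishing-gradient argument follows directly from $\sigma(x) = 1/(1 + e^{-x})$, using $1 - \sigma(x) = \frac{e^{-x}}{1 + e^{-x}}$:

$$
\begin{aligned}
\sigma'(x) &= \frac{d}{dx}\left(1 + e^{-x}\right)^{-1}
            = \frac{e^{-x}}{\left(1 + e^{-x}\right)^{2}} \\
           &= \frac{1}{1 + e^{-x}} \cdot \frac{e^{-x}}{1 + e^{-x}}
            = \sigma(x)\,\bigl(1 - \sigma(x)\bigr)
\end{aligned}
$$

Since $\sigma(x) \in (0, 1)$, the product $\sigma(x)\,(1 - \sigma(x))$ is at most $\frac{1}{4}$, reached at $x = 0$ where $\sigma(0) = \frac{1}{2}$.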
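The vanishing-gradient effect can be checked numerically. This is a small illustrative sketch, not a training setup. It evaluates $\sigma'(x) = \sigma(x)\,(1 - \sigma(x))$ at a few inputs, then looks at the best-case product of these factors through `n` stacked sigmoids. As a simplifying assumption, it ignores the weight matrices, which also enter the chain rule, so that the sigmoid's own contribution is isolated.

```python
import math

def sigmoid(x: float) -> float:
    # Numerically stable logistic function 1 / (1 + e^{-x}).
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def sigmoid_grad(x: float) -> float:
    # Analytic derivative: sigma'(x) = sigma(x) * (1 - sigma(x)).
    s = sigmoid(x)
    return s * (1.0 - s)

# The local gradient is largest at x = 0 and collapses as |x| grows.
for x in (0.0, 2.0, 5.0, 10.0, -10.0):
    print(f"x = {x:+5.1f}  sigma'(x) = {sigmoid_grad(x):.2e}")

# Backprop through n stacked sigmoids multiplies n such factors together.
# Even in the best case (every pre-activation exactly 0) each factor is 0.25,
# so the product of the sigmoid factors is at most 0.25 ** n.
for n in (1, 5, 10, 20):
    print(f"{n:2d} layers: best-case product of sigma' factors = {0.25 ** n:.2e}")
```

At `x = 10` the local gradient is already below `1e-4`, and after 10 layers even the best-case product is about `1e-6`. Any saturated unit along the path pushes it lower still.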
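The same-sign issue caused by positive sigmoid outputs can be shown with a toy example. This sketch assumes a single neuron $z = \mathbf{w}^\top \mathbf{a} + b$ whose inputs $\mathbf{a}$ are sigmoid outputs. The activations and upstream gradients below are made-up values chosen for illustration, and the helper names `weight_grad` and `sign_pattern` are hypothetical.

```python
# Hypothetical mini-batch of two samples. Each entry holds:
#   - activations a from a previous sigmoid layer (all in (0, 1), so all positive)
#   - the upstream gradient dL/dz for this neuron on that sample
samples = [
    ([0.9, 0.1, 0.6, 0.3], 0.5),
    ([0.2, 0.8, 0.4, 0.7], -0.5),
]

def weight_grad(a, dl_dz):
    """Per-sample gradient for z = sum_i w_i * a_i + b: dL/dw_i = dL/dz * a_i."""
    return [dl_dz * a_i for a_i in a]

def sign_pattern(v):
    """Compact string of the signs of a gradient vector, e.g. '+-+-'."""
    return "".join("+" if g > 0 else "-" if g < 0 else "0" for g in v)

per_sample = [weight_grad(a, dl_dz) for a, dl_dz in samples]
for i, g in enumerate(per_sample):
    # Every a_i > 0, so every entry shares the sign of dl_dz.
    print(f"sample {i}: signs {sign_pattern(g)}")

# Summing over the mini-batch lets the update mix signs across weights.
batch_grad = [sum(col) for col in zip(*per_sample)]
print(f"batch sum: signs {sign_pattern(batch_grad)}")
```

Each individual sample yields an all-positive (`++++`) or all-negative (`----`) weight gradient. The mini-batch sum (`+-+-`) can move some weights up and others down in a single step, which is why mini-batching softens the zig-zag behaviour.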